
- Advanced Technology Laboratories

Achieved the World's No. 1 Accuracy in Visual Commonsense Reasoning

April 18, 2022

KDDI Research, Inc.

KDDI Research, Inc. (Head office: Fujimino, Saitama; hereinafter “KDDI Research”), together with Professor Jure Leskovec of Stanford University, achieved the world's No. 1 accuracy, as of April 8, 2022, on the leaderboard for Visual Commonsense Reasoning (VCR*1), a benchmark on which cutting-edge companies and the world's leading universities in AI research compete.

[Background]

In recent years, AI technology based on deep learning has made great progress in image recognition and is widely applied in areas such as face recognition and autonomous driving. However, advanced image understanding, which requires drawing on commonsense about what an image depicts, has not yet been achieved. Realizing AI systems that incorporate commonsense has become a challenge for applications such as fully automated driving and domestic robots, which require high reliability and must be easy for humans to understand.

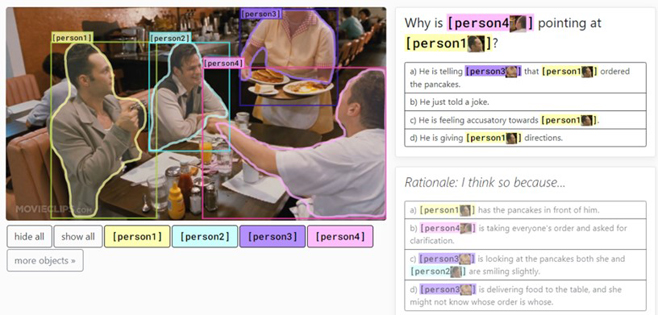

[Overview of VCR]

VCR aims to realize AI systems that go beyond conventional image recognition and can infer human commonsense and the grounds for a judgment. Specifically, given an image, a question about it, and a set of answer choices, the system must choose both the correct answer and the rationale that justifies it.
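In the VCR benchmark, each question comes with four answer choices and four rationale choices, and the joint Q→AR setting credits a prediction only when both picks are correct (so random guessing scores 1/16, or 6.25%). The minimal sketch below illustrates that scoring rule; the function name `q_to_ar_accuracy` and the example labels are hypothetical and are not taken from the official evaluation code.

```python
from typing import Sequence


def q_to_ar_accuracy(
    pred_answers: Sequence[int],
    pred_rationales: Sequence[int],
    gold_answers: Sequence[int],
    gold_rationales: Sequence[int],
) -> float:
    """Fraction of questions where BOTH the chosen answer and rationale are correct.

    Hypothetical helper illustrating the Q->AR rule; not the official VCR scorer.
    """
    n = len(gold_answers)
    if not (len(pred_answers) == len(pred_rationales) == len(gold_rationales) == n):
        raise ValueError("all inputs must have the same length")
    if n == 0:
        raise ValueError("need at least one question")
    # A question counts only if the answer index AND the rationale index match.
    correct = sum(
        1
        for pa, pr, ga, gr in zip(pred_answers, pred_rationales, gold_answers, gold_rationales)
        if pa == ga and pr == gr
    )
    return correct / n


# Three made-up questions (choice indices 0-3): only the first is fully correct.
acc = q_to_ar_accuracy([0, 2, 1], [3, 1, 0], [0, 2, 3], [3, 0, 0])
print(f"Q->AR accuracy: {acc:.3f}")  # prints "Q->AR accuracy: 0.333"
```

Getting the answer right but the rationale wrong (the second question) earns no credit, which is what makes Q→AR stricter than answering alone.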

Fig: An example of a VCR task

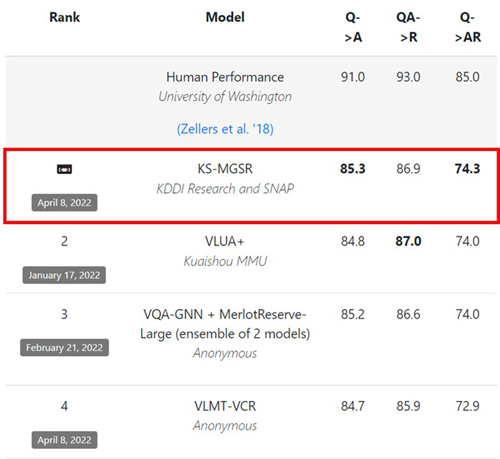

[Achievements]

The AI system KS-MGSR (KDDI SNAP Multimodal Graph based Semantic Reasoner), jointly developed by KDDI Research and Stanford University, derives the correct answer and the basis for its judgment from an image together with the question and candidate answer sentences about that image. The commonsense knowledge needed for this is constructed as a multimodal knowledge graph*2, and our original graph neural network (GNN*3) model uses it to infer both the correct answer and the rationale behind it. With KS-MGSR, we achieved the world's highest accuracy of 74.3% on the VCR subtask Q→AR, in which both the answer and its rationale must be chosen correctly.
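This release does not describe the internals of KS-MGSR. To make the general idea of a GNN over a multimodal knowledge graph a little more concrete, the sketch below shows one generic round of mean-aggregation message passing, similar in spirit to the GraphSAGE mean aggregator, on a tiny hypothetical graph that mixes an image-region node with text and concept nodes. The node names, feature values and weight matrices are invented for illustration; in a real GNN the weights are learned, and this is not KS-MGSR's model.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def message_passing_layer(
    features: Dict[str, Vector],
    edges: List[Tuple[str, str]],
    w_self: Matrix,
    w_neigh: Matrix,
) -> Dict[str, Vector]:
    """One round of mean-aggregation message passing on an undirected graph.

    Each node's new vector is relu(w_self @ h_v + w_neigh @ mean(h_u for u in N(v))).
    Generic illustration only; not the architecture of KS-MGSR.
    """
    if not features:
        return {}
    neighbors: Dict[str, List[str]] = {node: [] for node in features}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)
    dim = len(next(iter(features.values())))
    updated: Dict[str, Vector] = {}
    for node, h in features.items():
        nbrs = neighbors[node]
        # Average the neighbors' vectors (zero vector for an isolated node).
        if nbrs:
            mean = [sum(features[u][i] for u in nbrs) / len(nbrs) for i in range(dim)]
        else:
            mean = [0.0] * dim
        combined = [s + n for s, n in zip(matvec(w_self, h), matvec(w_neigh, mean))]
        updated[node] = relu(combined)
    return updated


# Hypothetical multimodal graph: an image region linked to a word, linked to a concept.
feats = {
    "region:person": [1.0, 0.0],
    "word:umbrella": [0.0, 1.0],
    "concept:rain": [0.5, 0.5],
}
edges = [("region:person", "word:umbrella"), ("word:umbrella", "concept:rain")]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned weights
out = message_passing_layer(feats, edges, identity, identity)
print(out["word:umbrella"])  # [0.75, 1.25]
```

After one round, the "umbrella" node's vector already mixes information from both the image region and the "rain" concept; stacking several such layers lets evidence propagate further across the graph.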

Fig: VCR Leaderboard

[Future activities]

To realize AI systems that have commonsense, we will expand the target visual information from still images to videos, and will work to further advance the algorithms and improve their generalization so they can be applied to a wider range of areas.

<Activities at KDDI Research>

KDDI and KDDI Research formulated “KDDI Accelerate 5.0”, based on a concept for the next-generation society envisioned for 2030, and summarized how it can be accomplished in the “Beyond 5G/6G White Paper”, in terms of the “Future Image” and the “Technologies” required to create an ecosystem that spurs innovation. To develop a new lifestyle, the two companies will continue to invest in R&D on seven technologies and on an orchestration technology that links them together. The results presented here fall under “AI”, one of the seven technologies. In April 2022, KDDI Research established the Human-Centered AI Research Institute to promote research and development of technologies through which humans and AI live in symbiosis and grow together through interaction.

<Activities at SNAP lab*4 of Stanford University>

The SNAP lab, led by Prof. Leskovec, conducts research on machine learning techniques for modeling structured data such as complex networks and graphs. The group works in a wide range of fields, including commonsense reasoning, recommender systems, social sciences, and drug discovery, with the aim of establishing machine learning methods that can be applied to systems of all scales, from protein interactions within a cell to social interactions.

(*1) Visual Commonsense Reasoning

(*2) A graph structure that represents the connections between pieces of human knowledge extracted from each modality (e.g., images and text).

(*3) A deep learning method that handles graph data consisting of nodes and edges.

(*4) Stanford Network Analysis Project

※The information contained in the articles is current at the time of publication. Products, service fees, service content and specifications, contact information, and other details are subject to change without notice.