- Advanced Technology Laboratories

World’s first real-time encoder development for point-cloud-compression compliant with the latest international standard.

~ To transmit an ultra-realistic 3D video for use in a metaverse ~

October 24, 2022

KDDI Research, Inc.

KDDI Research, Inc. (head office at Fujimino-shi, Saitama; Hajime Nakamura, President and CEO) announced today that it has successfully developed the world's first (Note 1) real-time encoder compliant with Video-based Point Cloud Compression (V-PCC), the latest international standard for point cloud data compression (Note 2). This technology significantly reduces the amount of data without compromising the visual quality of point clouds of people, allowing efficient real-time transmission over a mobile network. Because the point clouds are high-definition, subtle motions such as facial expressions and gestures are reproduced ultra-realistically. The technology will connect the real and the virtual, and is expected to be used as a metaverse application at future show events.

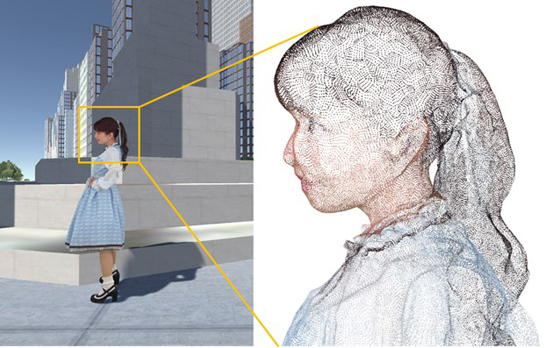

Figure 1: An example of point cloud data

【Background】

In recent years, many volumetric studios (Note 3) have opened and are now in operation. In these studios, content production using point clouds, which are ultra-realistic high-definition 3D data, has become popular; however, the data size of this content is enormous. At the same time, various types of terminals are used to view point-cloud content, such as PCs, tablets, smartphones, and head-mounted displays. Under these circumstances, a comfortable, high-quality viewing experience on these terminals is expected to be realized by reducing the size of the point cloud data while maintaining visual quality. Conventional compression technology, however, requires more than 50 Mbps, which is difficult to transmit stably over a mobile network; further reductions in size are therefore necessary.

KDDI Research has been involved in international standardization for V-PCC since 2020 and has accumulated the technologies and knowledge needed for practical applications of V-PCC. V-PCC is suited to dynamic 3D point clouds, such as those representing the human body. These can be used in content distribution such as live commerce with photorealistic human representation. Compared to uncompressed data, V-PCC can reduce the amount of data to 1/40 while maintaining quality, which is double the performance of conventional technologies. Consequently, V-PCC can halve the 50 Mbps bitrate mentioned above, making transmission via a mobile network possible.

On the other hand, the compression load is high, which makes real-time processing difficult. For example, providing an ultra-realistic viewing experience at various show events requires a point cloud of over 20 million points per second (1.0 Gbps).
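
The figures quoted in this section can be cross-checked with simple arithmetic. The sketch below is illustrative only: it assumes that 1.0 Gbps corresponds to exactly 20 million points per second (the text says "over"), and that the conventional technology reaches half of V-PCC's compression ratio, as stated above. All names are hypothetical.

```python
# Back-of-the-envelope check of the bitrates quoted in the text.
UNCOMPRESSED_BPS = 1_000_000_000   # 1.0 Gbps, from the text
POINTS_PER_SECOND = 20_000_000     # assumed exact; the text says "over 20 million"

VPCC_RATIO = 40                    # V-PCC: data reduced to 1/40
CONVENTIONAL_RATIO = VPCC_RATIO // 2   # "double the performance" implies 1/20

# Average raw size of one point (position plus color).
bits_per_point = UNCOMPRESSED_BPS / POINTS_PER_SECOND

# Bitrates after compression, in Mbps.
conventional_mbps = UNCOMPRESSED_BPS / CONVENTIONAL_RATIO / 1e6
vpcc_mbps = UNCOMPRESSED_BPS / VPCC_RATIO / 1e6

print(f"raw size per point : {bits_per_point:.0f} bits")
print(f"conventional       : {conventional_mbps:.0f} Mbps")
print(f"V-PCC              : {vpcc_mbps:.0f} Mbps")
```

Under these assumptions the conventional bitrate comes out at the 50 Mbps mentioned in the text, and V-PCC at half of that.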

【Achievement】

KDDI Research has successfully developed a V-PCC-compliant real-time encoder as a software implementation. Two new technologies have made encoding approximately 400 times faster, making a real-time encoder a reality.

1. High-speed conversion technology of point clouds to the legacy video format.

2. Task scheduling technology for the V-PCC framework to improve CPU usage.

* Detailed information is available in the appendix.
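
The overall figure of approximately 400 times is consistent with the two 20-fold gains reported in the appendix, if the two gains are assumed to multiply. The sketch below shows that arithmetic, plus the baseline speed it would imply under the further assumption that the accelerated encoder just reaches 30 fps. Both assumptions, and all names, are ours and are not stated in the release.

```python
# Speed-up factors reported in the appendix for the two technologies.
CONVERSION_SPEEDUP = 20    # point cloud to video-frame conversion
SCHEDULING_SPEEDUP = 20    # task scheduling across frame types

# Assumption: the two gains are independent and therefore multiply.
combined_speedup = CONVERSION_SPEEDUP * SCHEDULING_SPEEDUP


def implied_baseline_fps(target_fps: float, speedup: float) -> float:
    """Frame rate before acceleration, if target_fps is reached after it."""
    return target_fps / speedup


# Assumption: the accelerated encoder just meets the 30 fps real-time threshold.
baseline_fps = implied_baseline_fps(30.0, combined_speedup)
seconds_per_frame = 1.0 / baseline_fps

print(f"combined speed-up : {combined_speedup}x")
print(f"implied baseline  : {baseline_fps:.3f} fps "
      f"({seconds_per_frame:.1f} s per frame)")
```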

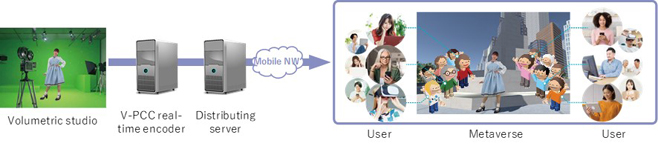

As a result, the point cloud can be transmitted efficiently in real time via a mobile network without compromising visual quality. This real-time point-cloud transmission is expected to create a new experience for music and fashion events: a performance filmed at a volumetric studio will be able to appear simultaneously in metaverse applications.

Figure 2: An example of the viewing experience of show events in the metaverse.

【Future vision】

KDDI Research will further develop live transmission systems and demo applications for smartphones and VR devices to promote the distribution of ultra-realistic point clouds.

This work was a unique initiative of KDDI Research, utilizing the results of research and development supported by the “Strategic Information and Communications R&D Promotion Programme (SCOPE)” of the Ministry of Internal Affairs and Communications, Grant no. JPJ000595.

【KDDI Research’s Initiatives】

KDDI and KDDI Research formulated “KDDI Accelerate 5.0” (Japanese only), based on a concept for the next-generation society envisioned for 2030, and summarized how it can be accomplished in the “Beyond 5G/6G Whitepaper” in terms of the “Future Image” and “Technologies” required to create an ecosystem that spurs innovation.

To develop a new lifestyle, the two companies will continue to invest in R&D on seven different technologies and on an orchestration technology that links them together. The results presented here correspond to one of the seven technologies, “XR”.

[Note]

1. This is the first time a V-PCC-compliant real-time encoder has been achieved (as of October 21, 2022, according to a KDDI Research survey).

2. A point cloud is a data format that represents a 3D object as a set of XYZ and RGB information. Video-based point cloud compression (V-PCC) was standardized by ISO/IEC JTC 1/SC 29/WG 11 (MPEG) in October 2020.

3. A studio in which many cameras are installed around a subject and point cloud content is created from video filmed from various angles.

【Supplement】

Encoder: The system responsible for point cloud coding. Point cloud coding is a compression method used to transmit and store point clouds with limited bandwidth and capacity. It reduces the amount of data by exploiting similarity within and between images or point locations.

Decoder: The system that receives the compressed data output from the encoder and decodes it into a point cloud. A decoding process must be prepared for each point cloud coding standard, and interoperability across different coding standards is generally not guaranteed.

Real-time encoder: An encoder that can complete the encoding process for a point cloud at a speed of 30 fps (frames per second) or higher. This is essential for live video transmission.
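
The 30 fps threshold translates into a time budget of about 33 ms per frame. The following sketch, with hypothetical function names and made-up sample timings, shows one way such a check could be expressed; it is not taken from the encoder itself.

```python
REAL_TIME_FPS = 30  # threshold from the definition above


def frame_budget_ms(fps: float = REAL_TIME_FPS) -> float:
    """Average time available to encode one frame, in milliseconds."""
    return 1000.0 / fps


def is_real_time(encode_times_ms, fps: float = REAL_TIME_FPS) -> bool:
    """True if the measured throughput is at least `fps` frames per second."""
    total_seconds = sum(encode_times_ms) / 1000.0
    return len(encode_times_ms) / total_seconds >= fps


# Hypothetical per-frame encoding times in milliseconds.
fast_run = [30.0, 32.0, 31.0]
slow_run = [40.0, 40.0]

print(f"budget per frame: {frame_budget_ms():.1f} ms")
print("fast run real-time:", is_real_time(fast_run))
print("slow run real-time:", is_real_time(slow_run))
```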

(Appendix)

【Technical details】

(1) High-speed conversion technology of point clouds to the legacy video format.

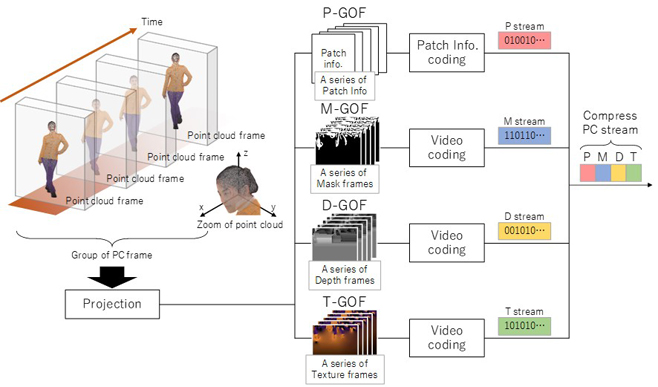

V-PCC decomposes a frame group of the point cloud into patches, projects them onto a 2D image, and converts them into legacy video frames. The conversion generates four types of data: patch information, texture frames, depth frames, and mask frames. Together, these allow the decoder to reconstruct the point cloud. All frames are passed to a legacy video encoder to generate bitstreams. Conventionally, the 2D plane onto which to project must be determined individually for each of the approximately 0.8 million points that make up the 3D point cloud in a given frame, which requires an enormous amount of processing time.
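
To make the relationship between the texture, depth, and mask frames concrete, the sketch below projects a handful of colored points along the Z axis onto a small 2D grid. It is a heavily simplified illustration under our own assumptions (integer coordinates, a single projection direction, only the nearest point kept per pixel); patch segmentation, packing, and the actual V-PCC syntax are omitted, and all names are hypothetical.

```python
def project_along_z(points, width, height):
    """Project (x, y, z, rgb) points onto the XY plane.

    Returns three frames of size height x width:
      depth   - z of the nearest point seen at each pixel
      texture - color of that nearest point
      mask    - 1 where a point was projected, 0 where the pixel is empty
    """
    depth = [[0] * width for _ in range(height)]
    texture = [[(0, 0, 0)] * width for _ in range(height)]
    mask = [[0] * width for _ in range(height)]

    for x, y, z, rgb in points:
        # Keep only the point closest to the projection plane per pixel.
        if mask[y][x] == 0 or z < depth[y][x]:
            depth[y][x] = z
            texture[y][x] = rgb
            mask[y][x] = 1
    return depth, texture, mask


# Hypothetical input: two points share pixel (0, 0); the nearer one wins.
sample_points = [
    (0, 0, 5, (255, 0, 0)),
    (0, 0, 3, (0, 255, 0)),
    (1, 1, 7, (0, 0, 255)),
]
depth, texture, mask = project_along_z(sample_points, width=2, height=2)
print(depth, texture, mask, sep="\n")
```

The mask frame is what lets a decoder tell an empty pixel apart from a point whose depth happens to be zero.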

In this development, we introduce a high-speed technology that divides the 3D space into small regions, each smaller than a patch but still containing many points. An appropriate plane is then determined for each small region rather than for each point. Furthermore, our deep knowledge of video compression is applied to the conversion of texture and depth frames to reduce processing time without compromising compression performance. By combining these methods, we have achieved a 20-fold increase in speed compared to the conventional approach.
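
The release does not describe how the plane for each small region is chosen. The sketch below is therefore only one possible reading, under our own assumptions: each point has a surface normal, the candidate planes are the six axis-aligned directions, regions are cells of a uniform grid, and a cell's plane is the direction best aligned with the sum of its normals. The names `planes_per_point`, `planes_per_region`, and `cell_size` are hypothetical.

```python
from collections import defaultdict

# Six axis-aligned projection directions: +X, -X, +Y, -Y, +Z, -Z.
AXES = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def best_plane(normal):
    """Index of the direction in AXES best aligned with `normal`."""
    def alignment(i):
        a = AXES[i]
        return a[0] * normal[0] + a[1] * normal[1] + a[2] * normal[2]
    return max(range(len(AXES)), key=alignment)


def planes_per_point(normals):
    """Conventional style: one plane decision for every point."""
    return [best_plane(n) for n in normals]


def planes_per_region(points, normals, cell_size):
    """One plane decision per grid cell, shared by all points in that cell."""
    normal_sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    cell_of_point = []
    for p, n in zip(points, normals):
        cell = tuple(int(c // cell_size) for c in p)
        cell_of_point.append(cell)
        for k in range(3):
            normal_sums[cell][k] += n[k]
    plane_of_cell = {cell: best_plane(s) for cell, s in normal_sums.items()}
    return [plane_of_cell[cell] for cell in cell_of_point]


# Hypothetical data: two points facing +Z in one cell, two facing +X in another.
pts = [(0.1, 0.2, 0.0), (0.3, 0.1, 0.0), (5.2, 0.1, 0.3), (5.4, 0.2, 0.1)]
nrm = [(0.0, 0.0, 1.0), (0.1, 0.0, 0.9), (1.0, 0.0, 0.0), (0.9, 0.1, 0.0)]
print(planes_per_point(nrm))
print(planes_per_region(pts, nrm, cell_size=1.0))
```

In this sketch the number of plane decisions drops from one per point to one per occupied cell, which is the kind of saving the paragraph above describes.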

Figure 3: Overview of point-cloud-compression data flow.

(2) Task scheduling technology for the V-PCC framework to improve CPU usage.

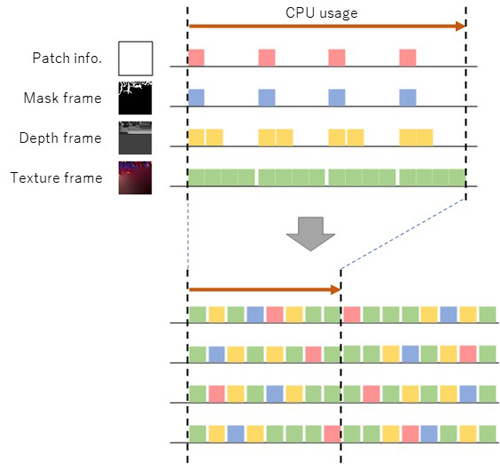

Parallelization is essential for exploiting CPU performance in real-time processing in PC software. Existing software-based video encoders, such as those for High Efficiency Video Coding (HEVC) and Versatile Video Coding (VVC), are parallelized on each coding tree block (CTB), which is the building block of a frame. CTB-based task scheduling technology for multi-core CPUs parallelizes CTB processing over multiple frames, so that the CPU usage ratio approaches the ideal condition. V-PCC, however, handles heterogeneous frame types, which constrains their processing order, as shown in Figure 3. For instance, when the conventional CTB-based task scheduler is applied to each frame type, the CPU usage ratio is as illustrated in the upper part of Figure 4. The figure clearly shows that usage is uneven across CPU cores, leaving room for further improvement in speed.

In this development, we introduce a new task scheduling technology that supports task dispatch across different frame types, taking into account the frame-level processing between frame types and the processing load of each frame type. As shown in the lower part of Figure 4, we have achieved a 20-fold increase in speed because the CPU usage ratio approaches the ideal condition.
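
The effect of dispatching tasks across frame types can be illustrated with a toy simulation. The sketch below compares scheduling each frame type separately, one after another, with scheduling all tasks from a single shared pool. It is a simplification under our own assumptions: the task costs and core count are made up, a greedy longest-task-first heuristic stands in for the real scheduler, and the processing-order constraints between frame types that the actual technology accounts for are ignored.

```python
import heapq


def makespan(task_costs, num_cores):
    """Finish time when tasks are assigned greedily, longest first,
    always to the core that currently has the least work."""
    loads = [0.0] * num_cores
    heapq.heapify(loads)
    for cost in sorted(task_costs, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + cost)
    return max(loads)


def utilization(total_work, span, num_cores):
    """Fraction of available core time spent doing useful work."""
    return total_work / (span * num_cores)


NUM_CORES = 4
# Hypothetical per-task costs (arbitrary time units) for each frame type.
tasks_by_type = {
    "mask": [1.0] * 2,
    "depth": [2.0] * 6,
    "texture": [3.0] * 8,
}
total_work = sum(sum(costs) for costs in tasks_by_type.values())

# Per-type scheduling: each frame type is processed as a separate stage.
per_type_span = sum(makespan(c, NUM_CORES) for c in tasks_by_type.values())

# Cross-type scheduling: all tasks are dispatched from one shared pool.
all_tasks = [c for costs in tasks_by_type.values() for c in costs]
pooled_span = makespan(all_tasks, NUM_CORES)

for name, span in (("per-type", per_type_span), ("pooled", pooled_span)):
    print(f"{name:8s} span={span:5.1f} "
          f"utilization={utilization(total_work, span, NUM_CORES):.2f}")
```

With these made-up costs, the per-type stages leave cores idle whenever a frame type has fewer tasks than cores, while the shared pool fills those gaps with work from other frame types.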

Figure 4: An example of CPU usage ratio improvement.

※ The information contained in the articles is current at the time of publication. Products, service fees, service content and specifications, contact information, and other details are subject to change without notice.